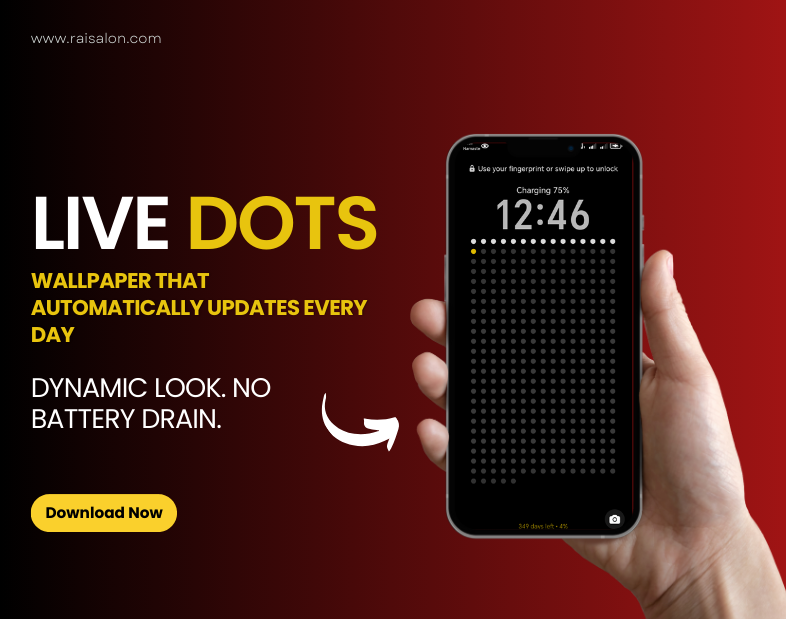

In a world where time seems to slip through our fingers, Live Dots offers a unique and beautiful way to visualize your year’s progress right on your phone’s wallpaper. This Android live wallpaper app turns each day of the year into a dot, creating a calendar that updates automatically every day to keep you mindful of time’s passage.

## What Makes Live Dots Special?

Live Dots is more than just a wallpaper: it’s a daily reminder to make every moment count. The app displays a minimalist grid of dots representing every single day of the year, with each dot showing where you are in your annual journey.

## Automatic Daily Updates

The standout feature of Live Dots is its automatic update system. Once you set your wallpaper, the app works silently in the background to refresh it once per day at midnight. The app uses Android’s WorkManager to schedule these daily updates efficiently, so your calendar stays current without draining your battery or requiring you to open the app.

## Stunning Visual Design

### Minimalist Dot Grid Layout

Live Dots presents your year as an elegant grid of 365 dots (or 366 for leap years), arranged in a clean 15-column by 25-row layout. Each dot represents a single day:

- White dots – Days you’ve already lived this year
- Accent-colored dot – Today (the current day)
- Dark gray dots – Days yet to come

This simple yet powerful visualization lets you see at a glance how much of the year has passed and how many days remain.

## How It Works

1. Install and launch Live Dots
2. Choose your accent color from four beautiful options
3. Preview your wallpaper to see how it looks
4. Apply to home screen, lock screen, or both
5. Confirm automatic daily updates
6. Relax – Your wallpaper now updates automatically every day at midnight!

## The Philosophy Behind Live Dots

Time is our most precious resource, yet it’s easy to lose track of days, weeks, and months.
Live Dots was created to help you:

- Visualize time’s passage in a tangible way
- Stay present and mindful of each day
- Appreciate the time you have
- Motivate yourself to make each day count

Every time you unlock your phone, you’ll see a beautiful reminder of where you are in your year’s journey, not to stress you out, but to inspire you to live intentionally.

## Conclusion

Live Dots is more than a wallpaper app: it’s a daily companion that helps you stay connected to the rhythm of your year. With its automatic daily updates, stunning visual design, customizable colors, and privacy-first approach, it’s the perfect blend of beauty and functionality.

Transform your phone screen into a meaningful year tracker. Download Live Dots today and make every day visible.
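
For readers curious about the mechanics, the dot grid and midnight refresh described above come down to a little date arithmetic. The following is a minimal Python sketch of that arithmetic, not the app’s actual code. The function names, the row-major fill order, and the `"empty"` state for leftover cells (a 15 × 25 grid has 375 cells, more than any year has days) are all assumptions made for illustration.

```python
import calendar
from datetime import date, datetime, timedelta

COLUMNS = 15  # grid width stated in the article
ROWS = 25     # grid height stated in the article (15 * 25 = 375 cells)


def days_in_year(year: int) -> int:
    """Return 366 for leap years, 365 otherwise."""
    return 366 if calendar.isleap(year) else 365


def dot_state(dot_index: int, today: date) -> str:
    """Classify a 0-based dot index as 'past', 'today', 'future', or 'empty'.

    'empty' is a hypothetical state for grid cells beyond the last day
    of the year; the article does not say how those cells are drawn.
    """
    if dot_index >= days_in_year(today.year):
        return "empty"
    today_index = today.timetuple().tm_yday - 1  # tm_yday is 1-based
    if dot_index < today_index:
        return "past"      # white dot
    if dot_index == today_index:
        return "today"     # accent-colored dot
    return "future"        # dark gray dot


def grid_position(dot_index: int) -> tuple[int, int]:
    """Return (row, column), assuming dots fill left to right, top to bottom."""
    return divmod(dot_index, COLUMNS)


def seconds_until_midnight(now: datetime) -> float:
    """Seconds from a naive local `now` to the next midnight (ignores DST shifts)."""
    next_midnight = datetime.combine(now.date() + timedelta(days=1),
                                     datetime.min.time())
    return (next_midnight - now).total_seconds()
```

With `today = date(2024, 3, 1)`, dot index 60 is classified as `"today"` and lands at row 4, column 0. On Android, a value like `seconds_until_midnight` could plausibly serve as the initial delay for a periodic WorkManager request. WorkManager does not guarantee exact run times, so a refresh scheduled this way may happen shortly after midnight rather than precisely at it.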