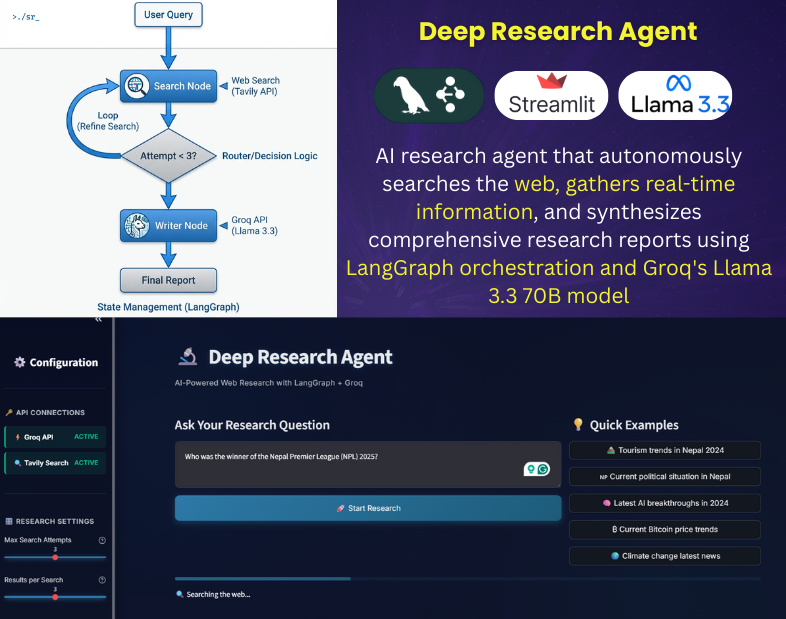

Deep Research Agent – Autonomous AI Research Assistant

Abstract

As Large Language Models (LLMs) evolve from simple text generators to reasoning engines, the focus of AI development has shifted toward agentic workflows: systems capable of autonomous planning, tool use, and self-correction. To explore the efficacy of modern orchestration frameworks, I engineered the Deep Research Agent, a fully autonomous system designed to perform iterative, multi-step research tasks. This project demonstrates a production-ready implementation of a cyclic graph architecture (LangGraph) that uses the Groq API for high-throughput inference. The resulting system achieves professional-grade research synthesis at a marginal operating cost of $0.005 per query, showing that high-performance autonomous agents can be built cost-effectively using open-weight models.

1. System Overview: The Deep Research Agent

The Deep Research Agent is not merely a wrapper for an LLM; it is a stateful application that mimics the workflow of a human analyst. Unlike zero-shot querying, this system employs an iterative “thought-loop” to refine information quality before generating a final response.

Core Capabilities:

2. Technical Stack & Design Choices

The stack was chosen to maximize flexibility while minimizing inference latency and operational costs.

3. Architectural Analysis: Cyclic Graph vs. Linear Chains

A key engineering decision in this project was the implementation of a Cyclic Graph architecture over a traditional Linear Chain.

4. Engineering Implementation & Challenges

The development process highlighted several critical aspects of building production-grade agents.

A. State Management Implementation

Effective state management is the backbone of any agentic system. I implemented a TypedDict structure with reducer operators to maintain context across iterations. This ensures that research findings are accumulated rather than overwritten during loops.
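The accumulate-versus-overwrite behavior described above can be illustrated with a minimal sketch. It is not the project's code: the field names, the `research_node` placeholder, and the `apply_update` helper are all assumptions made for illustration. In LangGraph the merge step is performed by the graph runtime; `apply_update` only imitates it here so that the example runs with the standard library alone (Python 3.9+).

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class ResearchState(TypedDict):
    # Field names are illustrative; the project's actual schema is not shown.
    query: str
    findings: Annotated[list[str], operator.add]  # reducer: concatenate lists
    iteration: int  # no reducer: each update overwrites the value


def apply_update(state: dict, update: dict) -> dict:
    """Hypothetical stand-in for the merge a graph runtime runs after a node."""
    hints = get_type_hints(ResearchState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and callable(metadata[0]):
            merged[key] = metadata[0](merged[key], value)  # accumulate
        else:
            merged[key] = value  # overwrite
    return merged


def research_node(state: dict) -> dict:
    """Placeholder node: returns only the new finding, not the full list."""
    step = state["iteration"] + 1
    return {"findings": [f"finding {step} for {state['query']}"], "iteration": step}


state = {"query": "solid-state batteries", "findings": [], "iteration": 0}
while state["iteration"] < 3:  # loop back until the stop condition holds
    state = apply_update(state, research_node(state))

print(state["findings"])
```

Because `findings` carries a reducer, each pass through the loop appends to the list, while `iteration` is simply replaced. Without the reducer, every iteration would discard the findings gathered by the previous one.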
B. Resilience and Error Handling

To ensure robustness suitable for automated tasks, I implemented exponential backoff strategies for all external API calls. This prevents cascading failures during momentary latency spikes from search or LLM providers.

C. Resource Optimization (Cost Analysis)

A primary objective was to demonstrate the economic feasibility of running autonomous agents at scale. By optimizing the system prompt and pruning search results (limiting context window usage), the system achieves a 95% cost reduction compared to proprietary model APIs (e.g., GPT-4): roughly $0.005 per query versus roughly $0.10.

| Metric | Standard API approach | Deep Research Agent (Optimized) |
| --- | --- | --- |
| Cost Per Query | ~$0.10 | **~$0.005** |
| Latency | Variable | < 3s (Inference) |
| Architecture | Black Box | Open / Customizable |

5. Conclusion & Future Scope

This project validates that professional-grade AI agents do not require prohibitive budgets or closed ecosystems. By leveraging LangGraph for sophisticated orchestration and Groq for high-speed inference, I have engineered a system that is both autonomous and economically scalable.

Future Research Directions:

Repository: github.com/kazisalon/Deep-Research-Agent
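The exponential backoff strategy mentioned in Section 4.B can be sketched as follows. This is a generic illustration under stated assumptions, not the repository's implementation: the function name `call_with_backoff`, its parameters, the default delays, and the `flaky_search` stand-in are all hypothetical. Jitter is added as a common refinement; the original text does not say whether the project uses it.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on exception with exponentially growing delays."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        # Assumption: real code would catch only the provider's transient errors.
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the last error
            # Delay doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay,
            # plus random jitter so concurrent callers do not retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay / 2))
    raise AssertionError("loop always returns or raises")


# Usage: a stand-in for a flaky search call that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_search() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return "results"


delays: list[float] = []  # record delays instead of sleeping, for demonstration
result = call_with_backoff(flaky_search, sleep=delays.append)
```

Passing `sleep` as a parameter keeps the retry logic testable without real waiting; in normal use the default `time.sleep` applies.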